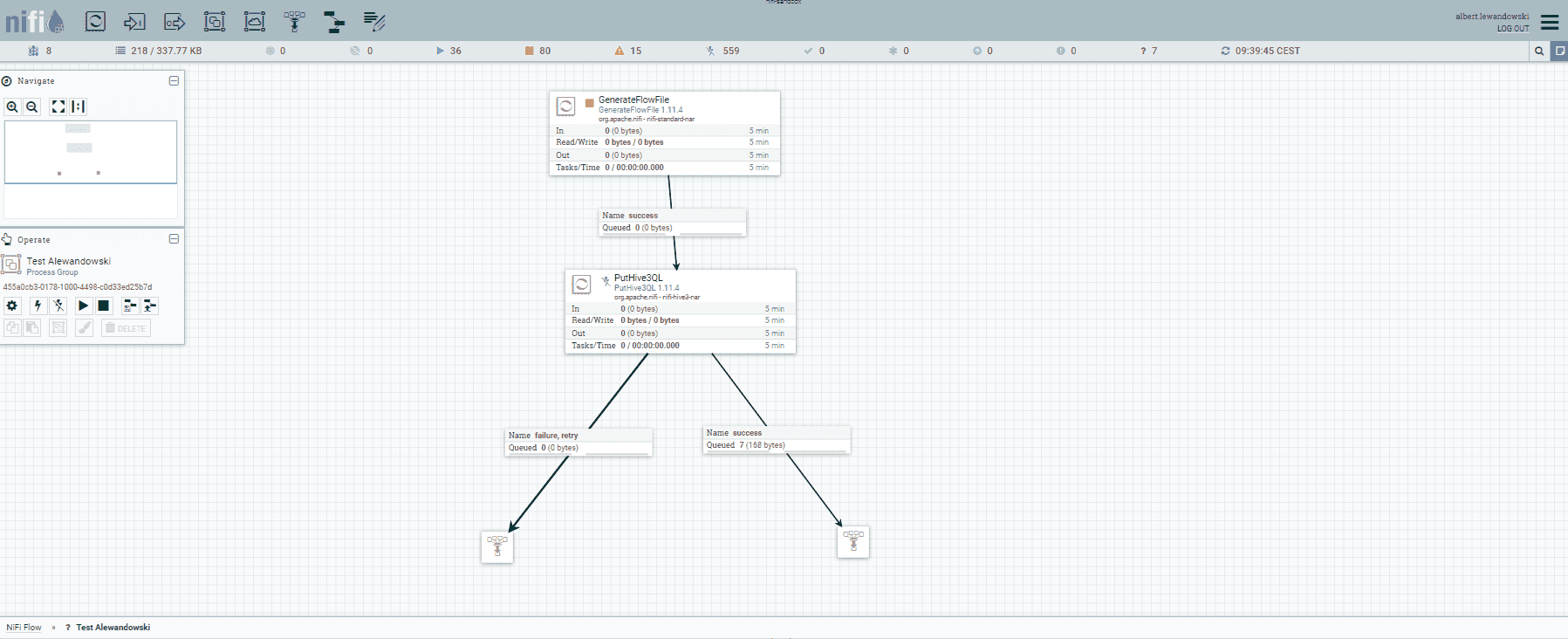

Apache NiFi is a popular big data processing engine with a graphical web UI that lets non-programmers build data pipelines quickly and without writing code, freeing them from text-based implementation methods. This, together with its wide range of features, has made NiFi a widely used tool.

If you are interested in reading more about using Apache NiFi in production from the developer's perspective, and about all the tradeoffs that come with its simplicity, then you should read the blog series on this topic.

Kubernetes is an open source system for managing containerized applications across multiple hosts. It is becoming more and more popular, including in the Big Data world, as it is now a mature solution that is interesting to many users. Besides being popular, Kubernetes enables faster delivery of results and simplifies deployments and updates (once the CI/CD pipeline and Helm charts have been prepared).

Moving some applications to Kubernetes is pretty straightforward, but this is not the case with Apache NiFi. It is a stateful application and in most cases it is deployed as a cluster on bare-metal or virtual machines. This would be fine if not for one important aspect of its architecture: NiFi nodes do not share or replicate the data they are processing between cluster nodes. This makes the whole process more complicated. Fortunately, there is a way to avoid the problems of NiFi's cluster mode, which additionally requires an installed ZooKeeper. The idea is to split the pipelines across separate NiFi instances, with each instance working as a standalone. This seems to be the right way to run stable NiFi instances on Kubernetes, and we can easily manage their configuration from a repository by using, for example, Helm charts with a dedicated values file.
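
The idea of one standalone instance per group of pipelines can be sketched as a small script that generates one values file per instance. This is only an illustration: the pipeline names, the grouping and every key in the values mapping (`fullnameOverride`, `replicaCount`, `jvmHeap`, `pipelines`) are assumptions, and a real chart defines its own schema. The output is JSON, which a YAML parser should also accept.

```python
import json

# Hypothetical grouping of pipelines into standalone NiFi instances.
PIPELINE_GROUPS = {
    "ingest": ["kafka-to-hdfs", "sftp-to-hdfs"],
    "export": ["hive-to-kafka"],
}


def build_values(instance_name, pipelines, heap="2g"):
    """Return a values mapping for one standalone NiFi instance.

    The key names are illustrative only; a real chart defines its own schema.
    """
    return {
        "fullnameOverride": f"nifi-{instance_name}",
        "replicaCount": 1,  # standalone instance: no NiFi cluster, no ZooKeeper
        "jvmHeap": heap,
        "pipelines": sorted(pipelines),
    }


def render_all(groups):
    """Map a values file name to its JSON content for every instance."""
    return {
        f"values-{name}.json": json.dumps(build_values(name, pipelines), indent=2)
        for name, pipelines in groups.items()
    }


if __name__ == "__main__":
    for filename, content in render_all(PIPELINE_GROUPS).items():
        print(f"# {filename}")
        print(content)
```

Keeping the grouping in one place in the repository makes it visible which pipeline runs on which instance, and each generated file can then be used for a separate release of the same chart.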

Here we come to the first important thing about NiFi. NiFi generates a heavy load of disk reads and writes, especially when we have a lot of pipelines that constantly read data, run operations on it and send it on, or that schedule and monitor processes based on the content of the data flow. It requires performant storage under the hood. A slow disk or slow network storage is not the right solution, so here I recommend using object storage (on-premise, like Ceph) or other fast storage. Under a high load, the right solution would be local SSD disks, but this will not provide real High Availability of the service.
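
Before choosing a storage class, it can help to get a rough number for each candidate volume. The sketch below measures sequential write throughput only, including the final `fsync`. It is not a replacement for a proper benchmark such as fio, and NiFi's real access pattern is a mix of reads and writes. The function name and the default sizes are assumptions made for this example.

```python
import os
import tempfile
import time


def write_throughput_mb_s(directory, total_mb=64, block_kb=1024):
    """Write about total_mb of random data into directory and return MB/s.

    The timing includes flush and fsync, so the page cache does not hide a slow device.
    """
    block = os.urandom(block_kb * 1024)
    blocks = max(1, (total_mb * 1024) // block_kb)
    written_mb = blocks * block_kb / 1024
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as handle:
            for _ in range(blocks):
                handle.write(block)
            handle.flush()
            os.fsync(handle.fileno())  # force the data down to the device
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # always clean up the probe file
    return written_mb / elapsed


if __name__ == "__main__":
    # Point this at the mount path of the volume under test (assumed here: the temp dir).
    rate = write_throughput_mb_s(tempfile.gettempdir(), total_mb=16)
    print(f"sequential write: {rate:.1f} MB/s")
```

Running the same probe from a pod against each candidate volume gives a first comparison between network storage and local SSD.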

The second thing is the network performance between the Kubernetes cluster and the Hadoop or Kafka clusters. We read data from the source, process it and then save it somewhere, so we need a stable, fast network connection to be sure that there won't be any bottlenecks. This is especially important when saving files, for example saving a file to HDFS and then rebuilding a Hive table.

The third thing is the migration process. It is not about switching all the NiFi instances in one day; we should plan for at least a week to move pipeline after pipeline and check that NiFi works as expected.

The fourth challenge is about building the right CI/CD pipeline for our needs. This, in fact, consists of multiple layers. The first is about building the base Docker image; here we can also use the official one. On top of it we build the specific images that contain our custom NARs; fortunately, multistage Dockerfiles work well in these cases and are the right choice here. The second part is about creating the deployment to Kubernetes. Helm and Helmfile are extensive, designed to be used as the base for many deployments, and it is easy to learn all their details.

The fifth challenge focuses on exposing NiFi to the users. In the Kubernetes world, we can expose an application by using a Service or an Ingress, which is straightforward for NiFi over HTTP but becomes more complicated with HTTPS. In the Ingress, the option responsible for SSL passthrough has to be enabled. One problem that occurred in the project was that NiFi treated the certificate from the Ingress as a user authentication attempt. On the other hand, the following scenario would work: HTTPS traffic is routed to a web service responsible for SSL termination, which then encrypts the traffic once again on its way to NiFi.
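
As an illustration of the passthrough option, the sketch below builds an Ingress manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. It assumes the ingress-nginx controller started with `--enable-ssl-passthrough` and its `nginx.ingress.kubernetes.io/ssl-passthrough` annotation. Other controllers use different options. The host, the Service name, the `nginx` class name and port 8443 are hypothetical values.

```python
import json


def nifi_passthrough_ingress(name, host, service_name, service_port=8443):
    """Build an Ingress manifest that forwards TLS connections untouched to NiFi.

    Assumes ingress-nginx with SSL passthrough enabled on the controller.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "annotations": {
                # TLS is not terminated by the controller; NiFi sees the client handshake.
                "nginx.ingress.kubernetes.io/ssl-passthrough": "true",
            },
        },
        "spec": {
            "ingressClassName": "nginx",  # assumed class name
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service_name,
                                        "port": {"number": service_port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = nifi_passthrough_ingress("nifi", "nifi.example.com", "nifi-ingest")
    print(json.dumps(manifest, indent=2))
```

With passthrough, routing is based on the host name sent by the client during the TLS handshake, so each NiFi instance needs its own host name.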

The sixth challenge is monitoring. We mainly use Prometheus in our on-premise projects, and configuring the Prometheus exporter is as simple as adding a separate process in NiFi responsible for pushing the metrics to the PushGateway, from which Prometheus can read them. Here we come to an issue with NiFi: there appear to be memory leaks in some processors. It is crucial, once NiFi starts running in Kubernetes, to monitor its resource usage and verify how it handles processing data. One piece of advice: set the minimum JVM heap size to the same value as the maximum, and leave a generous gap between the JVM memory value and the RAM limit of the whole pod.
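
The sizing advice can be sketched as a small helper that derives the heap settings from the pod memory limit. The 60% default is an assumption for illustration, not a measured recommendation. The `java.arg.2` and `java.arg.3` keys follow the stock `bootstrap.conf` of NiFi 1.x as far as I know, so check them against your version.

```python
def jvm_heap_args(pod_limit_mib, heap_fraction=0.6):
    """Return bootstrap.conf lines with equal -Xms and -Xmx below the pod limit.

    heap_fraction is the share of the pod memory limit given to the JVM heap;
    the rest is headroom for off-heap memory, threads and the OS.
    """
    if not 0 < heap_fraction < 1:
        raise ValueError("heap_fraction must be between 0 and 1")
    heap_mib = int(pod_limit_mib * heap_fraction)
    return [
        f"java.arg.2=-Xms{heap_mib}m",  # minimum heap, same as the maximum
        f"java.arg.3=-Xmx{heap_mib}m",  # maximum heap
    ]


if __name__ == "__main__":
    # Hypothetical pod with an 8 GiB memory limit.
    for line in jvm_heap_args(8192):
        print(line)
```

Setting both values to the same number avoids heap resizing at runtime, and the headroom reduces the chance that the pod is killed for exceeding its memory limit while the heap itself is still within bounds.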

The seventh part is about managing certificates, as there are multiple ways to do this. The simplest solution is to generate a certificate each time using the NiFi Toolkit, run as a sidecar for NiFi. Another is to use an already created certificate, truststore and keystore (stored as Kubernetes Secrets or, as recommended, in a secret manager like Google Cloud Secret Manager or HashiCorp Vault) and mount them into the NiFi StatefulSet. If we need to add a certificate to the truststore, we can re-upload the truststore or import the certificate dynamically during each start.
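
For the variant with Kubernetes Secrets, the sketch below builds a Secret manifest from existing keystore and truststore files. The Secret name, the file names and the placeholder bytes are assumptions; in a real setup the content would be read from the generated files, and the manifest itself should not be committed to the repository.

```python
import base64
import json


def tls_secret_manifest(name, files):
    """Build an Opaque Secret from a mapping of file name to raw bytes."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            # Kubernetes expects base64-encoded values under "data".
            filename: base64.b64encode(content).decode("ascii")
            for filename, content in files.items()
        },
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for the real keystore and truststore files.
    stores = {
        "keystore.jks": b"keystore-bytes",
        "truststore.jks": b"truststore-bytes",
    }
    print(json.dumps(tls_secret_manifest("nifi-tls", stores), indent=2))
```

The Secret can then be mounted as a volume in the StatefulSet, so that each key appears as a file at the path NiFi is configured to read.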

The Helm chart for Apache NiFi and NiFi Registry is available here.

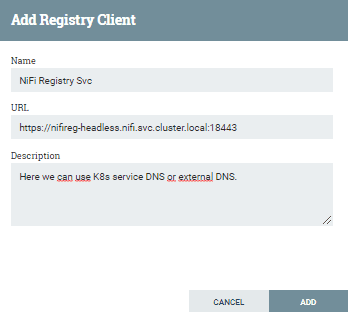

Apache NiFi Registry was created to become a kind of Git repository for Apache NiFi pipelines. Under the hood, NiFi Registry can use a Git repository to persist the flows, and it stores all the information about changes in its own database (by default an H2 database, but we can also set up a PostgreSQL or MySQL database instead). It is not a perfect solution, and there are a lot of difficulties with using it as part of a CI/CD pipeline but, in all honesty, it seems to be the right solution for the NiFi ecosystem to store all pipelines and their history in one place. The biggest challenge with managing it seems to be storing and updating its keystore and truststore, exactly like with Apache NiFi.

Like Apache NiFi, NiFi Registry is a stateful application. It requires storage for the locally cloned Git repository and for the database (if we choose the default H2). Here I recommend using PostgreSQL or MySQL, which are more robust than H2 and can be managed separately from NiFi Registry.
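
Switching the Registry to PostgreSQL comes down to a few entries in `nifi-registry.properties`. The sketch below renders them from parameters. The `nifi.registry.db.*` property names are taken from the NiFi Registry administration guide as I remember it, so verify them against your version. The host, database name, driver directory and credentials are hypothetical, and the password should come from a secret rather than from the repository.

```python
def registry_db_properties(host, database, username, password, port=5432,
                           driver_dir="/opt/nifi-registry/drivers"):
    """Return the database-related NiFi Registry properties for PostgreSQL."""
    return {
        "nifi.registry.db.url": f"jdbc:postgresql://{host}:{port}/{database}",
        "nifi.registry.db.driver.class": "org.postgresql.Driver",
        # Directory that contains the PostgreSQL JDBC driver jar (assumed path).
        "nifi.registry.db.driver.directory": driver_dir,
        "nifi.registry.db.username": username,
        "nifi.registry.db.password": password,
    }


def to_properties_text(props):
    """Render a mapping as key=value lines in insertion order."""
    return "\n".join(f"{key}={value}" for key, value in props.items()) + "\n"


if __name__ == "__main__":
    props = registry_db_properties("postgres.example.com", "nifireg", "nifireg", "change-me")
    print(to_properties_text(props), end="")
```

With the database outside the pod, only the cloned Git repository still needs a persistent volume.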

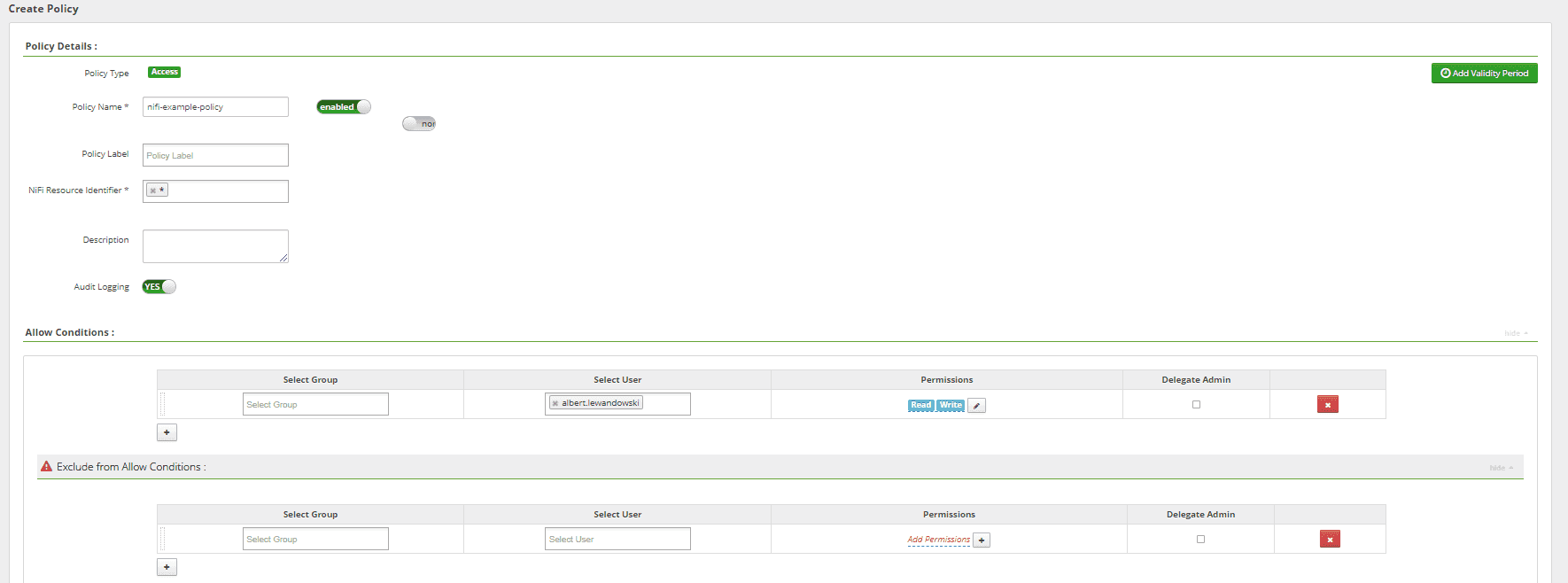

An important aspect of any enterprise-grade data platform is managing permissions to all services. The most basic setup manages permissions, users and groups directly in Apache NiFi or Apache NiFi Registry, but thankfully we can use something more comprehensive and flexible: Apache Ranger. It is a framework to enable, monitor and manage comprehensive data security across the Hadoop platform.

With its extensive features, we can easily set up NiFi and NiFi Registry policies directly in the GUI or via the REST API and, moreover, see the audit log with information about every access denied to a user by the Ranger policies. For this, we need to configure Ranger Audit in the NiFi instances and set up the connections to Infra Solr and HDFS, which are used for storing hot and cold audit data.

The basic requirement is having Apache Ranger installed.

The first step in connecting Ranger and NiFi is building a new Docker image with the Ranger plugin for each NiFi. The best way to achieve this is to create a multistage Dockerfile in which we build the plugin and then add it to the layer with NiFi itself. You can see the example in the repository.

The second step requires adding new policies in Ranger, which we can simply test during the first run of our secured setup.

The next step is to create all the files required by Ranger, such as the configuration of the connection from NiFi to Ranger and the audit settings, and to update the NiFi authorizers to use the Ranger plugin. Then we need to start the NiFi instances and verify that everything works as expected.
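
The policies from the second step can also be created through the Ranger REST API instead of the GUI. The sketch below only builds the request and does not send it. It assumes Ranger's public v2 policy endpoint (`/service/public/v2/api/policy`) with basic authentication, and a NiFi service definition with a `nifi-resource` resource and `READ`/`WRITE` access types. The Ranger URL, the service name, the user and the credentials are hypothetical.

```python
import base64
import json
import urllib.request


def nifi_policy(service, name, resource, users, access_types=("READ",)):
    """Build a Ranger policy payload for one NiFi resource path."""
    return {
        "service": service,  # name of the NiFi service registered in Ranger
        "name": name,
        "isEnabled": True,
        "isAuditEnabled": True,
        "resources": {"nifi-resource": {"values": [resource]}},
        "policyItems": [
            {
                "users": list(users),
                "accesses": [{"type": t, "isAllowed": True} for t in access_types],
            }
        ],
    }


def build_request(ranger_url, policy, username, password):
    """Prepare (but do not send) the POST request that creates the policy."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{ranger_url.rstrip('/')}/service/public/v2/api/policy",
        data=json.dumps(policy).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    policy = nifi_policy("nifi_ingest", "view-flow", "/flow", ["alice"])
    request = build_request("https://ranger.example.com:6182", policy, "admin", "change-me")
    print(request.get_method(), request.full_url)
    print(json.dumps(policy, indent=2))
    # Sending is left out on purpose: urllib.request.urlopen(request)
```

Keeping the policies as code in the repository makes the secured setup reproducible for every new NiFi instance.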

Setting up Kerberos in NiFi is a piece of cake once the deployment process is finished. We need to remember about the connection between the Kubernetes cluster and the Kerberos service provider, like FreeIPA or AD. The most important part is creating a headless keytab, one whose principal does not contain a hostname, to simplify the management of keytabs for the NiFi instances. Moreover, we need to mount the krb5.conf file and install the Kerberos client packages.

Apache NiFi is one of the most popular Big Data applications and has been in use for a long time. There have been multiple releases and it has become a quite mature solution. We can find many services like NiFi Registry or NiFi Toolkit within its ecosystem. Using Kubernetes as the platform for running NiFi simplifies the deployment, management, upgrade and migration processes that are complex in older setups.

Surely, Kubernetes is not the remedy for all issues with NiFi, but it may be a useful next step to make the NiFi platform a better one.
