When it comes to machine learning, most products are designed to work in batches, meaning they process data at fixed intervals rather than in real time. This approach is often easier to manage and, in many cases, meets the needs of the business. However, there are situations where real-time machine learning is essential. In this article, we will explore why.

To understand why real-time machine learning is important, it's helpful to first understand the different paradigms of deployment for machine learning solutions.

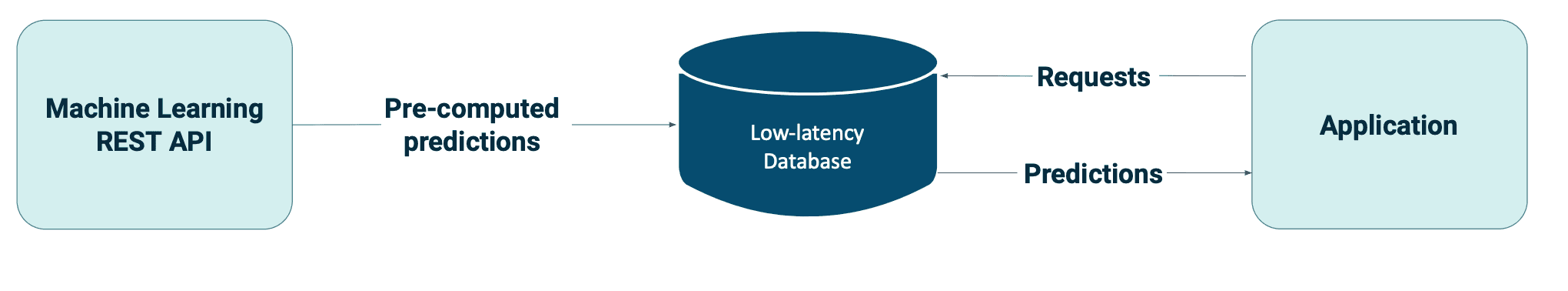

According to Databricks, batch deployment is the most common way of deploying machine learning models, accounting for approximately 80-90% of all deployments. Batch deployment means running a model's predictions ahead of time and saving them for later use. For live serving, the results are written to a low-latency database that can return them quickly; when fast lookups are not required, predictions can instead be kept in less performant data stores.

In this setup, all predictions are precomputed periodically (e.g. daily), before any prediction request arrives. Batch processing systems such as Spark or MapReduce are used to process large amounts of data efficiently. Caching the precomputed predictions also decouples computation from serving.
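As a rough illustration of the batch pattern, the Python sketch below precomputes a score for every user in one periodic job and writes it to a key-value store, so that serving is a plain lookup. The toy `score_user` model, the feature name, and the dict standing in for a low-latency database such as Redis are all hypothetical.

```python
from typing import Callable, Dict

Features = Dict[str, float]

def score_user(features: Features) -> float:
    """Hypothetical toy churn model: more days since the last order means higher risk."""
    return min(1.0, features.get("days_since_last_order", 0.0) / 100)

def run_batch_job(users: Dict[str, Features],
                  model: Callable[[Features], float],
                  store: Dict[str, float]) -> None:
    """Periodic job (e.g. daily): precompute a prediction for every user."""
    for user_id, features in users.items():
        store[user_id] = model(features)

def serve_prediction(store: Dict[str, float], user_id: str, default: float = 0.0) -> float:
    """Request path: no model runs here, only a lookup of the cached value."""
    return store.get(user_id, default)

users = {"u1": {"days_since_last_order": 30.0}, "u2": {"days_since_last_order": 120.0}}
store: Dict[str, float] = {}  # stands in for a low-latency key-value database
run_batch_job(users, score_user, store)
print(serve_prediction(store, "u1"), serve_prediction(store, "u2"))
```

The serving function never touches the model, which is what makes batch serving cheap, and also why it cannot answer for inputs the job did not precompute.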

Typical use cases for batch predictions include churn prediction, customer segmentation, and sales forecasting. These use cases do not typically require real-time data.

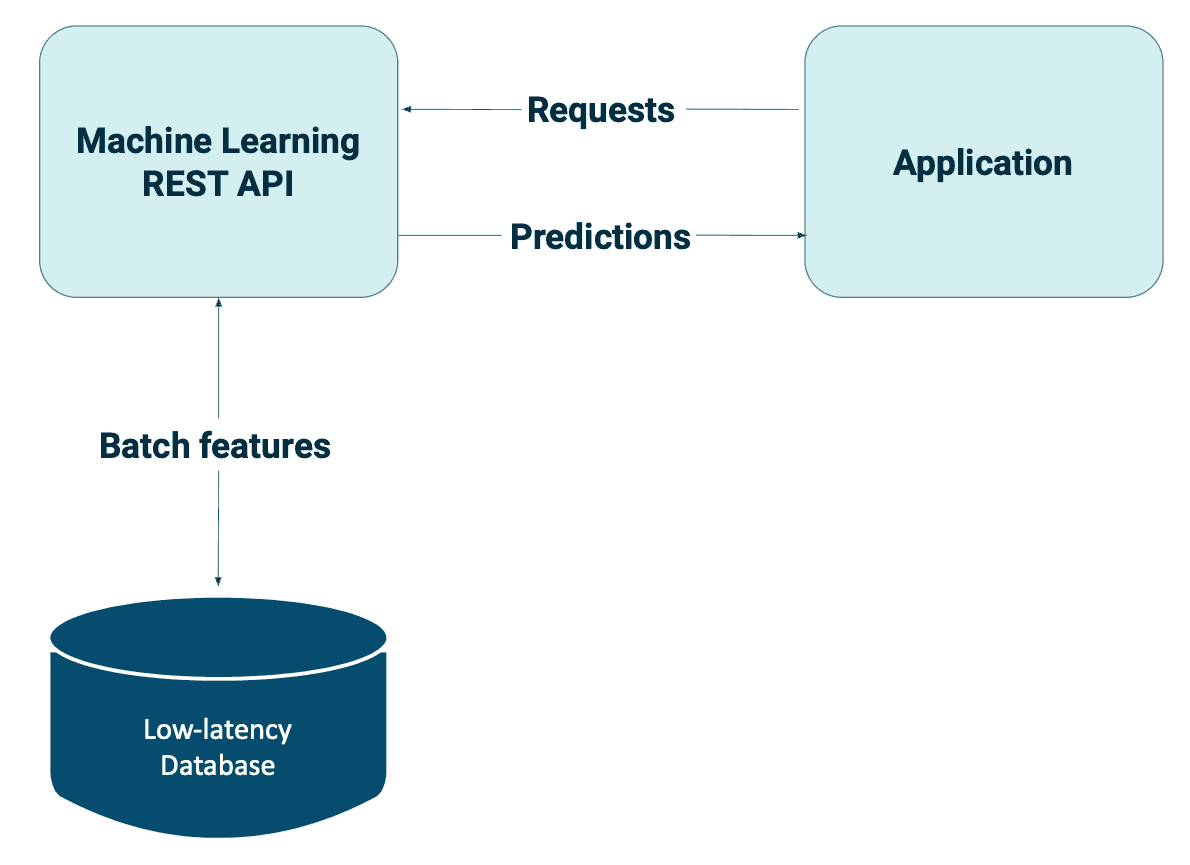

Although real-time deployments represent a smaller share of the deployment landscape, they often involve high-value tasks. Real-time deployments can use either batch features or streaming features. Note that while features in real-time deployments may be processed online, the models themselves are generally still trained in batch mode. This is distinct from online ML, where training data also arrives continuously and specialized versions of ML algorithms are trained on it as it arrives. Online ML is outside the scope of this article.

Batch (static) features are pieces of information that rarely or never change, such as customer demographics (date of birth, gender, income) or product attributes (color, size, product category and images).

Streaming (online) features, on the other hand, are computed from streaming data and are based on real-time events. Examples include aggregate streamed events (such as impressions, clicks, likes, or purchases), social media data (such as sentiment analysis or trending topics), or sensor data from IoT devices.
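A streaming feature such as "clicks in the last five minutes" is updated with every incoming event rather than recomputed from scratch. The sketch below keeps a per-user deque of event timestamps and evicts those that fall out of the window. It assumes events arrive in timestamp order, and the class name and window length are illustrative choices, not any particular framework's API.

```python
from collections import defaultdict, deque
from typing import Deque, Dict

class SlidingWindowCounter:
    """Counts events per key over the last `window_seconds`, updated event by event."""

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def add(self, key: str, timestamp: float) -> None:
        # Assumes timestamps for a given key arrive in increasing order.
        self._events[key].append(timestamp)

    def count(self, key: str, now: float) -> int:
        events = self._events[key]
        # Drop timestamps that have fallen out of the window (now - window, now].
        while events and events[0] <= now - self.window_seconds:
            events.popleft()
        return len(events)

clicks = SlidingWindowCounter(window_seconds=300)
for ts in (0, 60, 200, 290):
    clicks.add("user-42", ts)
print(clicks.count("user-42", now=310))  # 3: the click at t=0 has expired
```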

Here are a few examples of real-time machine learning applications:

While batch predictions are useful and sufficient for most use cases, they have limitations. For example, consider an e-commerce website that wants to predict the optimal discount for a user based on the products in their basket. The number of possible product combinations is far too large to precompute. Another example is a chatbot: it can be asked an endless variety of questions, phrased in any number of ways, so the combinations and permutations of words and phrases are virtually infinite.
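To put "too large to precompute" in numbers: if a basket is a set of distinct products (ignoring quantities), a catalog of N products allows C(N,1) + C(N,2) + ... + C(N,k) baskets of up to k items. The catalog size and basket limit below are hypothetical; even these modest values give roughly 8.3 × 10^17 possible baskets.

```python
from math import comb

def basket_combinations(catalog_size: int, max_basket_size: int) -> int:
    """Distinct baskets of 1..max_basket_size different products (quantities ignored)."""
    return sum(comb(catalog_size, k) for k in range(1, max_basket_size + 1))

# Hypothetical shop: 10,000 products, baskets of at most 5 different items.
print(f"{basket_combinations(10_000, 5):.2e}")
```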

Even in use cases where all predictions could be generated in advance, doing so can be extremely wasteful. Consider a recommender system that predicts items for customers. Periodically generating millions of predictions, many of which may never be used, is both costly in compute resources and time-consuming. Why generate all predictions in advance if you can generate each one when it is needed?

This is where online predictions with batch features come in. Instead of precomputing all possible predictions before requests arrive, the system generates a prediction as soon as a request arrives. This is also known as on-demand prediction. Online serving helps avoid redundant work, for example by generating predictions only for users who are actually visiting your website.
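On-demand prediction can be sketched as a small wrapper: the model runs only when a request for a user arrives, and the result is cached for repeat requests. The linear "model", the feature values and the unbounded cache are hypothetical; in practice the cache would need an expiry so that predictions are refreshed whenever the batch features are.

```python
from typing import Dict, Sequence

class OnDemandPredictor:
    """Scores a user only when they make a request; repeat requests hit a cache."""

    def __init__(self, features: Dict[str, Sequence[float]], weights: Sequence[float]) -> None:
        self.features = features  # batch features, e.g. loaded from a feature store
        self.weights = weights    # hypothetical linear model
        self.cache: Dict[str, float] = {}
        self.model_calls = 0

    def predict(self, user_id: str) -> float:
        if user_id not in self.cache:
            self.model_calls += 1
            x = self.features[user_id]
            self.cache[user_id] = sum(w * v for w, v in zip(self.weights, x))
        return self.cache[user_id]

# 1,000 known users, but only the visitors below trigger model runs.
features = {f"u{i}": (i / 1000, 1.0) for i in range(1000)}
predictor = OnDemandPredictor(features, weights=(0.8, 0.1))
for visitor in ["u1", "u7", "u1"]:
    predictor.predict(visitor)
print(predictor.model_calls)  # 2
```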

Embeddings are a very common type of batch feature used in online serving. In machine learning, embeddings represent data as low-dimensional numerical vectors that capture its key features. They are often learned by neural networks and can represent many types of data, such as words, images, or even entire documents. Embeddings make complex data easier for models to process, which can lead to better performance in tasks such as classification, clustering and recommendation.
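A common way to use precomputed embeddings online: item vectors are produced in a batch job, and at request time the service compares a user vector with them and returns the closest items. The tiny three-dimensional vectors below are made up, and the brute-force scan stands in for the approximate nearest-neighbour index a production system would typically use.

```python
import heapq
import math
from typing import Dict, List, Sequence

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def recommend(user_vec: Sequence[float],
              item_embeddings: Dict[str, Sequence[float]],
              k: int) -> List[str]:
    """Top-k items by cosine similarity to the user's embedding."""
    return heapq.nlargest(k, item_embeddings,
                          key=lambda item: cosine(user_vec, item_embeddings[item]))

# Hypothetical embeddings, precomputed offline (batch features).
items = {
    "hiking boots": [0.9, 0.1, 0.0],
    "sandals": [0.1, 0.9, 0.0],
    "rain jacket": [0.8, 0.0, 0.6],
}
user = [1.0, 0.0, 0.2]  # e.g. built from the user's recent views at request time
print(recommend(user, items, k=2))  # ['hiking boots', 'rain jacket']
```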

However, moving your models from offline to online presents some challenges, the first of which is inference latency. Several studies show that website speed can significantly affect business metrics such as conversion rate or average order value, so models must generate predictions quickly. Ways to keep latency low include selecting only the most informative features, pre-computing features, using simpler or smaller models, adding compute resources, and applying model quantization. Another challenge is ensuring sufficient throughput and availability: once deployed, the model must remain available with high uptime.
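Model quantization, one of the latency levers mentioned above, stores weights as 8-bit integers plus a scale factor instead of 32-bit floats, which makes them four times smaller. The sketch shows only the arithmetic of symmetric per-tensor int8 quantization on a made-up weight list; real deployments would rely on the quantization tooling of their serving framework rather than hand-written code.

```python
from typing import List, Sequence, Tuple

def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: each w is approximated by scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: Sequence[int], scale: float) -> List[float]:
    return [scale * v for v in q]

weights = [0.12, -0.5, 0.33, 0.01]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Rounding error per weight is at most half a quantization step.
print(q, max(abs(w - r) for w, r in zip(weights, restored)) <= scale / 2)
```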

As you can see, online deployment can be one of the more complicated ways of deploying models.

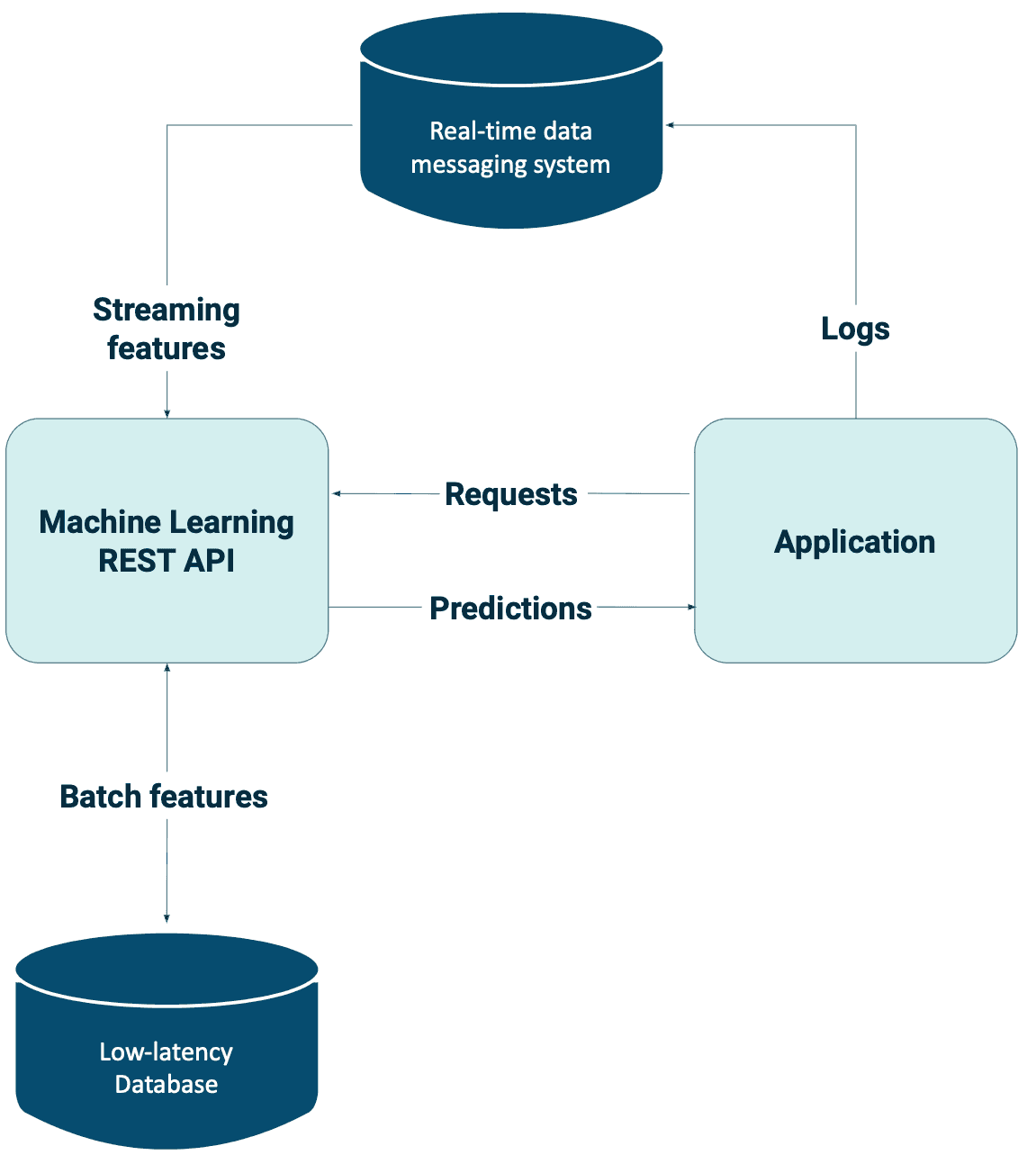

Static features also have limitations, as they make your models less responsive to changing environments. Switching to online predictions with streaming features allows you to use dynamic features to make more relevant predictions. For many problems, you need both streaming and batch features, which can be joined together in a single data pipeline and fed into your machine learning models.
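Joining the two kinds of features can be as simple as merging a cached profile with fresh event aggregates into a fixed-order vector before calling the model. The feature names, the in-memory dict standing in for a feature store, and the zero default for missing values are assumptions for illustration.

```python
from typing import Dict, List, Mapping

# Hypothetical batch features, refreshed periodically and keyed by customer.
BATCH_FEATURES: Dict[str, Dict[str, float]] = {
    "c1": {"age": 34.0, "avg_order_value": 52.0},
}

# The model expects features in this fixed order.
FEATURE_ORDER = ["age", "avg_order_value", "clicks_last_5min", "cart_items"]

def build_feature_vector(customer_id: str, streaming: Mapping[str, float]) -> List[float]:
    """Joins static (batch) and fresh (streaming) features into one model input."""
    # Streaming values win if a name appears in both sources.
    row = {**BATCH_FEATURES.get(customer_id, {}), **streaming}
    # Missing features default to 0.0 so the vector always has the same length.
    return [float(row.get(name, 0.0)) for name in FEATURE_ORDER]

print(build_feature_vector("c1", {"clicks_last_5min": 7, "cart_items": 2}))
# [34.0, 52.0, 7.0, 2.0]
```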

Streaming features are especially useful in mission-centric contexts, where users visit your application with a pre-existing goal. Providing them with the right content or functionality in real-time is critical to keeping them engaged and preventing them from switching to competitors.

Let's say you're booking a hotel for your next vacation. Previously, you visited a specific country, and the website remembers this information, recommending hotels based on your previous travel destination. However, you want your recommendations updated based on the current context, considering any changes in your travel preferences or interests since your last trip. The recommendations may not be as relevant or useful if the website only relies on static features like your previous travel history. In this case, it would be beneficial to incorporate dynamic features that consider real-time data such as your current location, search history, and recent interactions with the website. By doing so, the website can provide more personalized and up-to-date recommendations that better reflect your current travel preferences.

Nevertheless, incorporating streaming data adds significant complexity to your applications. To take full advantage of real-time predictions from streaming data, you need appropriate real-time pipelines. Technologies such as Apache Flink or Apache Kafka enable the computation of streaming features. However, stream processing poses unique challenges, because the data is unbounded and arrives at variable rates.
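To make the unbounded-stream problem concrete, the sketch counts events per key in fixed (tumbling) event-time windows. Because events can arrive late or out of order, it uses a watermark, the largest event time seen minus an allowed lateness, to decide when a window is complete; events for windows that were already emitted are dropped. Engines such as Apache Flink offer event-time windows and watermarks as built-in concepts; this single-process generator only illustrates the idea and is not Flink's API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[float, str]              # (event_time_seconds, key)
WindowResult = Tuple[float, str, int]  # (window_start, key, count)

def tumbling_counts(events: Iterable[Event], window: float,
                    allowed_lateness: float) -> Iterator[WindowResult]:
    """Counts events per key in fixed event-time windows over an unbounded stream."""
    open_windows: Dict[float, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    max_seen = float("-inf")
    for ts, key in events:
        max_seen = max(max_seen, ts)
        watermark = max_seen - allowed_lateness
        start = ts - (ts % window)
        if start + window > watermark:  # window still open: accept the event
            open_windows[start][key] += 1
        # Emit (and forget) every window whose end the watermark has passed.
        for s in sorted(w for w in open_windows if w + window <= watermark):
            for k, n in sorted(open_windows.pop(s).items()):
                yield (s, k, n)
    # A finite input has ended: flush whatever is still open.
    for s in sorted(open_windows):
        for k, n in sorted(open_windows[s].items()):
            yield (s, k, n)

stream = [(1, "a"), (3, "b"), (12, "a"), (4, "a"), (16, "a"), (2, "b"), (25, "a")]
for result in tumbling_counts(stream, window=10, allowed_lateness=5):
    print(result)
# The out-of-order (4, "a") is still counted; (2, "b") arrives after
# window [0, 10) was emitted and is dropped as too late.
```

The `allowed_lateness` parameter is the trade-off in miniature: a larger value accepts more stragglers but delays every result.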

Moreover, online predictions are designed to make real-time decisions, meaning they can immediately impact the system or application they are supporting. This means that model monitoring for errors or negative feedback loops is critical to prevent issues from quickly escalating and causing further damage.
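A minimal form of such monitoring: once ground truth arrives (for example, a transaction later confirmed as fraudulent or legitimate), record whether each prediction was wrong and raise an alert when the error rate over the most recent predictions crosses a threshold. The window size and threshold are hypothetical, and a real system would forward the alert to its alerting tooling rather than return a boolean.

```python
from collections import deque
from typing import Deque

class RollingErrorMonitor:
    """Tracks the error rate of the last `window` predictions and flags when it exceeds a threshold."""

    def __init__(self, window: int, threshold: float) -> None:
        self.outcomes: Deque[bool] = deque(maxlen=window)  # True = prediction was wrong
        self.threshold = threshold

    def record(self, was_error: bool) -> bool:
        """Stores one outcome; returns True if an alert should fire."""
        self.outcomes.append(was_error)
        # Only alert on a full window, to avoid reacting to the first few outcomes.
        full = len(self.outcomes) == self.outcomes.maxlen
        rate = sum(self.outcomes) / len(self.outcomes)
        return full and rate > self.threshold

m = RollingErrorMonitor(window=4, threshold=0.5)
alerts = [m.record(e) for e in [False, True, True, False, True, True]]
print(alerts)  # [False, False, False, False, True, True]
```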

While real-time processing may seem attractive, it is not always straightforward and can bring additional costs and complexity. For many common use cases, batch processing is an excellent approach because it is highly efficient. Stream processing, in contrast, is fast because it processes data as soon as it arrives. Carefully consider these trade-offs before adopting true real-time streaming, and only do so after identifying a business use case that justifies the additional complexity and cost compared to batch processing.

Let's start with the definitions. What exactly is fraud detection?

Fraud detection is the process of identifying and preventing fraudulent activity within a system, organization or network. Fraud can take many forms, such as:

Fraudulent activities can result in significant financial losses, reputational damage and legal consequences for individuals and organizations. Fraud detection uses various methods and technologies, including rule-based systems and machine learning algorithms, to detect and prevent fraudulent activity in real time. Its goal is to identify and stop fraudulent activity before it can cause harm, and to help ensure the integrity and security of systems and organizations.
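The rule-based approach mentioned above can be as simple as a few hand-written checks per transaction. The fields, thresholds and rule names below are invented for illustration. Rules like these are easy to audit, but they have to be updated by hand as fraudsters change tactics, which is one reason machine learning is used alongside them.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Transaction:
    card_id: str
    amount: float
    country: str
    home_country: str
    tx_last_hour: int  # hypothetical streaming feature, computed upstream

def rule_based_flags(tx: Transaction) -> List[str]:
    """Returns the names of the (hypothetical) rules this transaction triggers."""
    flags = []
    if tx.amount > 5_000:
        flags.append("large_amount")
    if tx.country != tx.home_country:
        flags.append("foreign_country")
    if tx.tx_last_hour > 10:
        flags.append("high_velocity")
    return flags

tx = Transaction(card_id="card-1", amount=7_200.0, country="BR",
                 home_country="PL", tx_last_hour=12)
print(rule_based_flags(tx))  # ['large_amount', 'foreign_country', 'high_velocity']
```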

We will focus on credit card fraud as this is the most common form of fraud in the industry.

Credit card fraud is a type of financial fraud that involves the unauthorized use of a credit card to make purchases or withdraw cash. Here's how it typically works, step by step:

Examples of credit card fraud may include:

As a result, customers are charged for items they did not purchase. Credit card fraud can have serious consequences for both the cardholder and the issuer, including but not limited to:

If fraudulent activity is detected, the cardholder is typically notified, and the fraudulent transactions are reversed. The card issuer may also block the card and issue a new one to the cardholder. Protecting credit card details and reporting any suspicious activity as soon as possible is essential.

In today's world, transactions are processed almost instantly, providing customers with a seamless experience. However, this speed of processing also leaves banks and payment processors with a shorter time frame to identify and prevent fraud. In cases of online fraud, a thief may execute several transactions, resulting in significant financial losses. Therefore, it is essential that fraud detection occurs in near real-time. Delaying fraud detection until the next day may make it more challenging to recover the client's money.

Real-time fraud detection is critical because it allows businesses to respond to threats as they happen and prevent financial losses. Without it, fraud may only be detected after a financial loss has already occurred.

Fraud detection, much like cybersecurity, is an ongoing battle between attackers (fraudsters) and defenders (fraud detection systems). Fraudsters are constantly seeking new ways to profit from their malicious activities, while fraud detection systems are continuously challenged to stay one step ahead of them.

Machine learning algorithms can enable the dynamic detection of fraudulent transactions by analyzing large amounts of data to identify anomalous patterns associated with fraudulent activity. Incorporating streaming features into these models can enhance their responsiveness to changes. This means that financial institutions and cardholders can be immediately notified of suspicious transactions, allowing them to take swift action to prevent further fraudulent activity.
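One simple way to spot an anomalous pattern in a stream is to compare each transaction amount with the card's own history. The sketch keeps a running mean and variance with Welford's online algorithm, so no raw history has to be stored, and returns a z-score that could serve as a streaming feature for a model. It is a hypothetical illustration, not a complete fraud model.

```python
import math
from dataclasses import dataclass

@dataclass
class RunningStats:
    """Welford's online algorithm: mean and variance updated one value at a time."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; undefined for fewer than two values.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

def amount_zscore(stats: RunningStats, amount: float) -> float:
    """Scores the amount against the card's history first, then adds it to that history."""
    z = (amount - stats.mean) / stats.std if stats.std > 0 else 0.0
    stats.update(amount)
    return z

stats = RunningStats()
for amount in [20.0, 25.0, 30.0, 22.0, 28.0]:  # hypothetical past purchases on one card
    amount_zscore(stats, amount)
print(round(amount_zscore(stats, 900.0), 1))  # far above the card's usual spend
```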

In 2020, credit card issuers, merchants, and consumers suffered a total of $28.58 billion in credit card fraud losses.

Real-time features can significantly enhance the performance of our model, which can have a major impact on credit card fraud prevention. Even a slight improvement in accuracy can translate into substantial savings, amounting to millions of dollars! In addition, fraud detection features require low-latency processing of real-time data sources to keep feature values fresh and up to date.
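Feature freshness in practice means computing values at the moment a transaction arrives. The sketch derives three hypothetical velocity features per card (transaction count and amount sum over the last ten minutes, and seconds since the previous transaction) before recording the new transaction. It assumes transactions for a card arrive in time order; in production this state would typically live in a stream processor or an online feature store rather than in process memory.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Tuple

class CardVelocityFeatures:
    """Per-card features over a sliding time window, updated as each transaction arrives."""

    def __init__(self, window_seconds: float = 600) -> None:
        self.window = window_seconds
        self.history: Dict[str, Deque[Tuple[float, float]]] = defaultdict(deque)
        self.last_seen: Dict[str, float] = {}

    def observe(self, card_id: str, ts: float, amount: float) -> Dict[str, float]:
        """Returns features describing the card's recent activity, then records this transaction."""
        past = self.history[card_id]
        # Evict transactions older than the window.
        while past and past[0][0] <= ts - self.window:
            past.popleft()
        last = self.last_seen.get(card_id)
        features = {
            "tx_count_window": float(len(past)),
            "amount_sum_window": sum((a for _, a in past), 0.0),
            # -1.0 marks "no previous transaction for this card".
            "seconds_since_last_tx": float(ts - last) if last is not None else -1.0,
        }
        past.append((ts, amount))
        self.last_seen[card_id] = ts
        return features

velocity = CardVelocityFeatures(window_seconds=600)
velocity.observe("card-1", ts=0, amount=40.0)
velocity.observe("card-1", ts=30, amount=15.0)
print(velocity.observe("card-1", ts=45, amount=999.0))
# {'tx_count_window': 2.0, 'amount_sum_window': 55.0, 'seconds_since_last_tx': 15.0}
```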

Here are some real-time features that we can consider to improve our machine learning models:

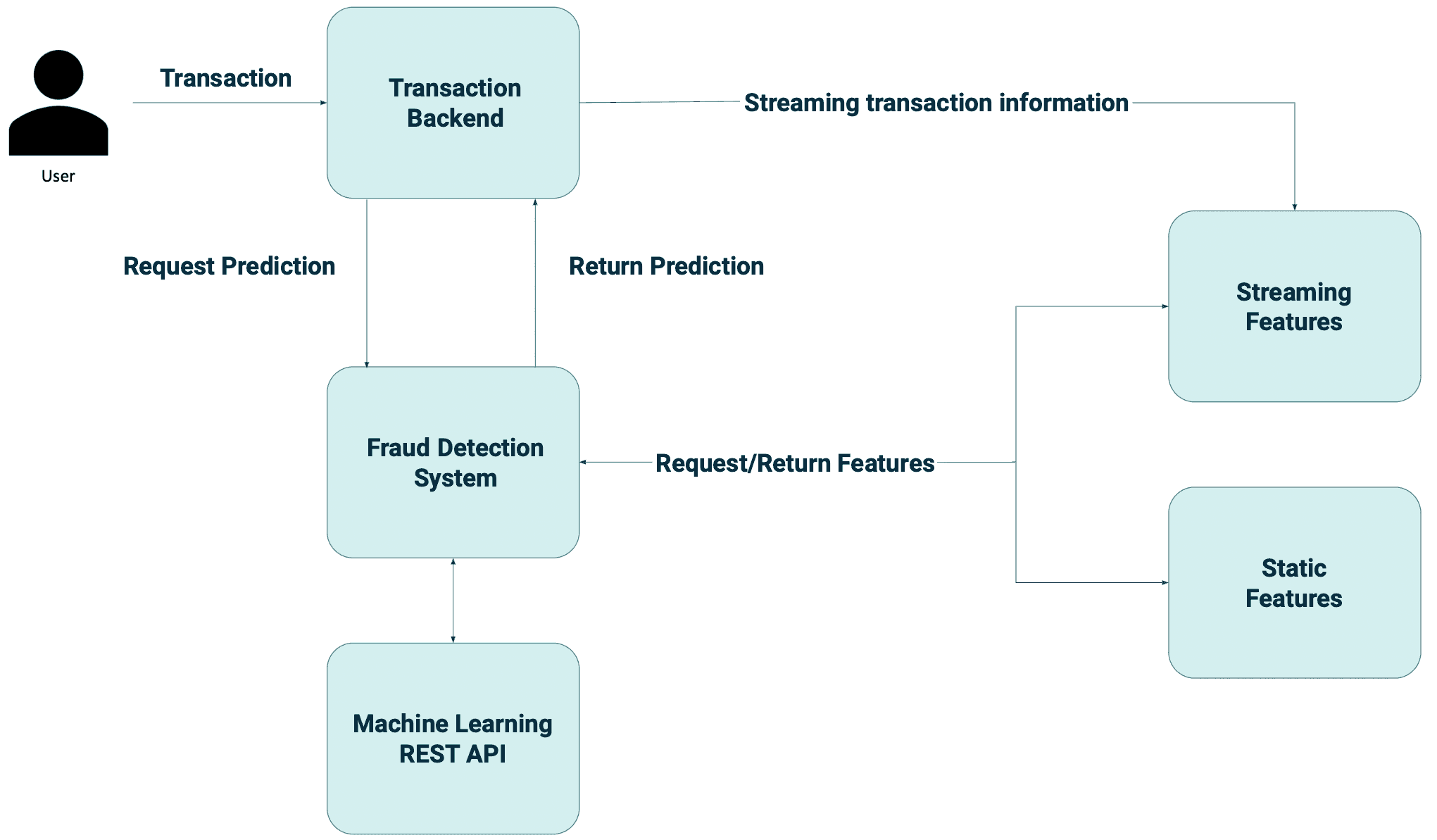

This is an example of what a real-time fraud detection system could look like.

It would work as follows:

The software infrastructure must be designed to handle a high volume of transactions. The system must also be fault-tolerant and scalable to ensure it can handle increased traffic. Furthermore, it is essential to have proper monitoring and alert systems in place to identify and resolve any issues that may arise.

Overall, real-time detection is crucial for credit card fraud prevention because it enables individuals and businesses to respond quickly to fraudulent activity. Acting quickly helps prevent further unauthorized access to funds, limits financial losses and improves the chances of recovering lost funds.
