The 4th edition of DataMass, and the first one we have had the pleasure of co-organizing, is behind us. We would like to thank all the speakers for the mass of knowledge and experience you shared with us. Also, thanks to the participants for your support, networking and for contributing to the unique atmosphere of the conference.

Let’s now look at the top trends we observed in the presentations, who was represented on stage, the three top-rated presentations and, finally, the reviews and takeaways from a cross-section of the talks.

During the Summit we heard 23 presentations which were given by 28 speakers who came from all over the world: New York, New Orleans, Hamburg, Budapest and Warsaw. The main trends that were revealed by these presentations were:

Probably the most important insight is how quickly companies can actually build their solutions using the cloud. With the current cloud offerings you can iterate much faster than ever before. What's more, during this conference we saw many examples of real-world use of the cloud.

Our speakers represent a large number of data-driven companies from all over the world.

We would like to thank the invited speakers for their contributions of experience and knowledge.

We would also like to thank the community, because almost half of the presentations came from the Call for Presentations process.

Here are the top three listener-rated presentations:

You can read more below about this presentation in Grzegorz Kołpuć’s review.

Presentations review by Maciej Maciejko, Staff Data Engineer at GetInData

The DataMass Gdańsk Summit attracted a lot of Big Data enthusiasts and specialists. As a Data Engineer, I wouldn't have forgiven myself if I had missed the event. The conference was an opportunity to discover the leading trends in the industry and see how companies successfully deal with data processing.

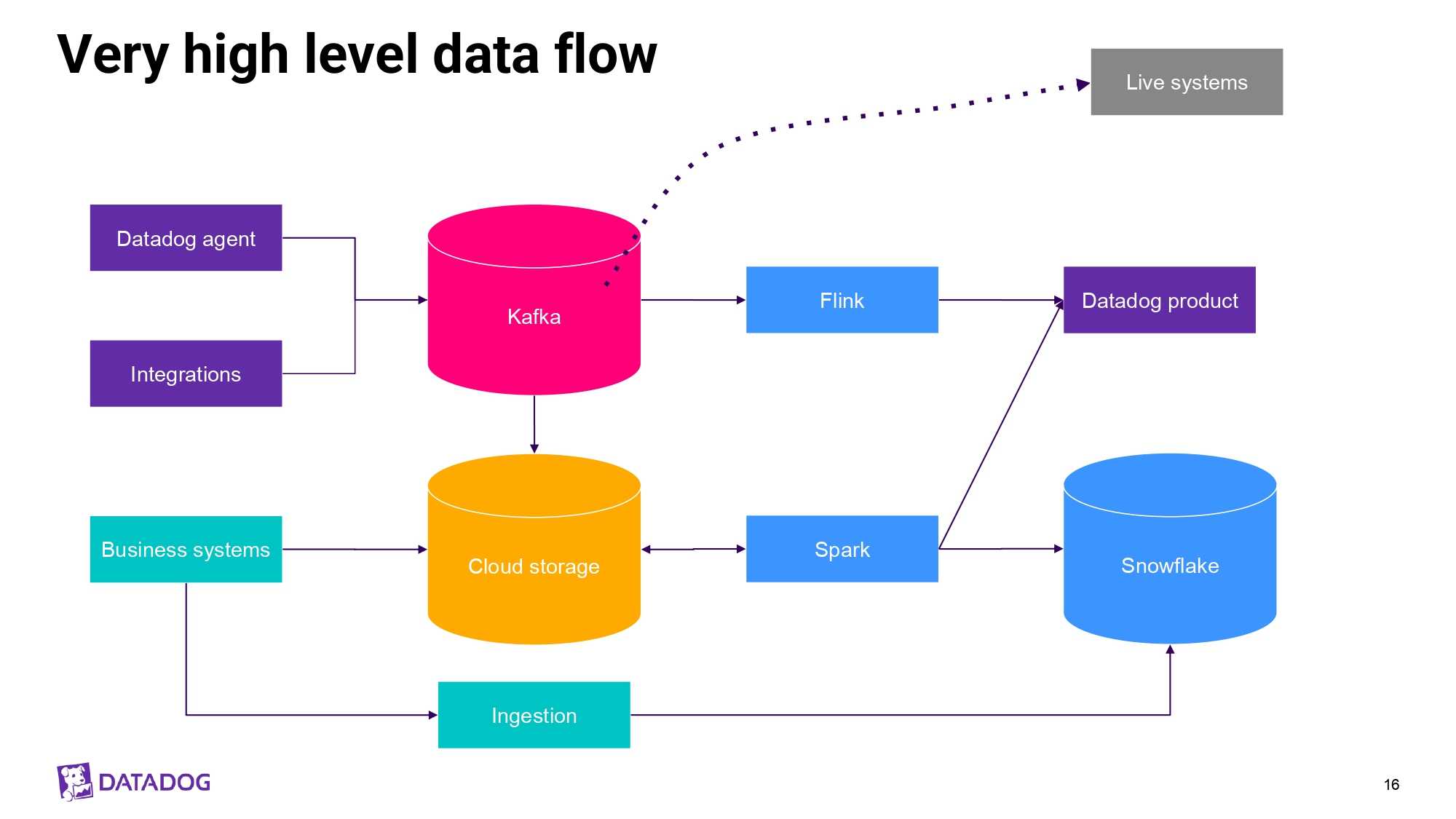

The first presenter, Wouter de Bie, explained how the infrastructure of Datadog (a cloud monitoring service) works in a multi-cloud environment in his lecture “Data Infrastructure in a Multi-Cloud environment”. The key to success is cloud-agnostic technology such as Kubernetes. To simplify, data is processed within a single environment, defined as a cloud and a region, and only the results may be exchanged between environments. Wouter emphasized the importance of metadata, which is crucial for identifying producers and consumers and for understanding the data flow. Datadog uses Luigi for task orchestration, Flink and Spark for ETL, and Snowflake for ELT.

Notably, Snowflake is getting more and more popular. During the presentation “Let’s build our own Cloud Data Platform”, Łukasz Leszewski traced the genealogy of Snowflake and explained why its architecture is better than that of traditional databases and how it simplifies working with ETL/ELT.

During the presentation “Bank Analytics in the Cloud”, Łukasz Hunka described the transformation from an expensive and unscalable on-premise setup to a “full-cloud” solution. He explained how to measure the overall performance of an IT department using multiple criteria such as time-to-production, CI/CD, speed of access to data and model advancement. Based on these measurements it was easy to discover the strengths and weaknesses. The next step was to optimize the whole development and delivery process. Kedro played a very important role in this, as it allowed Data Scientists to “talk in one language”. At the end, Łukasz pointed out that centralized architecture leads to bottlenecks, which can be solved by Data Mesh.

The Data Mesh concept uses a decentralized architecture which allows domain teams to perform cross-domain analytics on their own. This was explained by Szymon Homa during the presentation “Data Mesh concept, executed by Trino”. Data Mesh is based on 4 principles: Domain ownership, Data as a product, a Self-service Data Platform and Federated Governance. That’s the theory. The more practical side of the presentation focused on Trino, a very fast-growing, open-source, distributed query engine. It can integrate almost any data source and query it using plain SQL. Data Mesh with Trino can be a powerful alternative to data lakes and ETLs for companies with integrated, multi-domain products.
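To make the idea of cross-domain analytics in plain SQL a bit more concrete, here is a minimal sketch. It does not use Trino at all: as a local stand-in it relies on Python's built-in `sqlite3` module and SQLite's `ATTACH DATABASE`, so that two separately owned database files can be joined in one statement, loosely mirroring the way Trino addresses tables as `catalog.schema.table`. The domain names (`sales`, `crm`), table layouts and sample rows are all hypothetical and were not part of the presentation.

```python
import os
import sqlite3
import tempfile


def create_domain_db(path, ddl, insert_sql, rows):
    """Create one domain team's database file and load its rows."""
    con = sqlite3.connect(path)
    try:
        con.execute(ddl)
        con.executemany(insert_sql, rows)
        con.commit()
    finally:
        con.close()


def revenue_per_country(orders_path, customers_path):
    """Join two separately owned databases with a single SQL statement."""
    con = sqlite3.connect(":memory:")
    try:
        # Each ATTACH stands in for a catalog owned by one domain team.
        con.execute("ATTACH DATABASE ? AS sales", (orders_path,))
        con.execute("ATTACH DATABASE ? AS crm", (customers_path,))
        query = """
            SELECT c.country, SUM(o.amount) AS revenue
            FROM sales.orders AS o
            JOIN crm.customers AS c ON c.id = o.customer_id
            GROUP BY c.country
            ORDER BY c.country
        """
        return con.execute(query).fetchall()
    finally:
        con.close()


def demo():
    """Build two hypothetical domain databases and run the cross-domain query."""
    with tempfile.TemporaryDirectory() as workdir:
        orders_path = os.path.join(workdir, "orders.db")
        customers_path = os.path.join(workdir, "customers.db")
        create_domain_db(
            orders_path,
            "CREATE TABLE orders (customer_id INTEGER, amount REAL)",
            "INSERT INTO orders VALUES (?, ?)",
            [(1, 10.0), (1, 5.0), (2, 7.5)],
        )
        create_domain_db(
            customers_path,
            "CREATE TABLE customers (id INTEGER, country TEXT)",
            "INSERT INTO customers VALUES (?, ?)",
            [(1, "PL"), (2, "HU")],
        )
        return revenue_per_country(orders_path, customers_path)


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is only the shape of the query: each domain keeps its own store, and the consumer joins them by qualified name without an intermediate ETL copy. A real Trino deployment would add connectors, distributed execution and access control on top of that idea.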

Márton Balassi gave a great lecture titled “Running Apache Flink in any cloud environment”. He presented a cloud-agnostic solution with Kubernetes (again!) as the orchestration layer and the new Flink Kubernetes Operator in the scheduler role. At last, state management is easy, consistent and supported by the Flink community!

Marcin Szeliga convinced me during his presentation “Medical Image Analysis using Auto ML” that ML can be simple, cheap and extremely useful. You don’t have to know how to create ML models or have specialist domain knowledge to start working with them to get great results.

I also have to mention other leading technologies such as Apache Airflow (a cron-based scheduler) and dbt (data transformation), which are very commonly used, as the other presentations showed.

The DataMass Gdańsk Summit was a great experience and I’m looking forward to the next edition.

Presentations review by Grzegorz Kołpuć, Staff Data Engineer at GetInData

DataMass got off to a really strong start with Wouter de Bie, a former Spotify engineer who’s currently working for Datadog as Director of Engineering. His presentation, “Data Infrastructure in a Multi-Cloud environment”, reflected the main focus of the industry at that time: the cloud. Wouter talked about how Datadog solves client-specific problems related to a variety of cloud vendors and geolocations/regions. Being cloud agnostic is definitely a trend on such platforms. Datadog developed an in-house solution (partly based on its Mortar acquisition) to manage the cluster lifecycle across multiple clouds. The shared experience taught a good lesson: invest in a good abstraction layer and use cloud-agnostic components so that you can go multi-cloud.

The cloud area was explored further in Łukasz Hunka’s “Bank Analytics in the Cloud” presentation and Łukasz Leszewski’s “Let’s build our own Cloud Data Platform”. They presented a somewhat more business-oriented view, showing how to manage migration projects in a cost-effective manner. Snowflake is a modern data warehouse that was designed to be cloud native, and Łukasz Leszewski explained how engineering resource limits and infrastructure problems can be addressed out of the box.

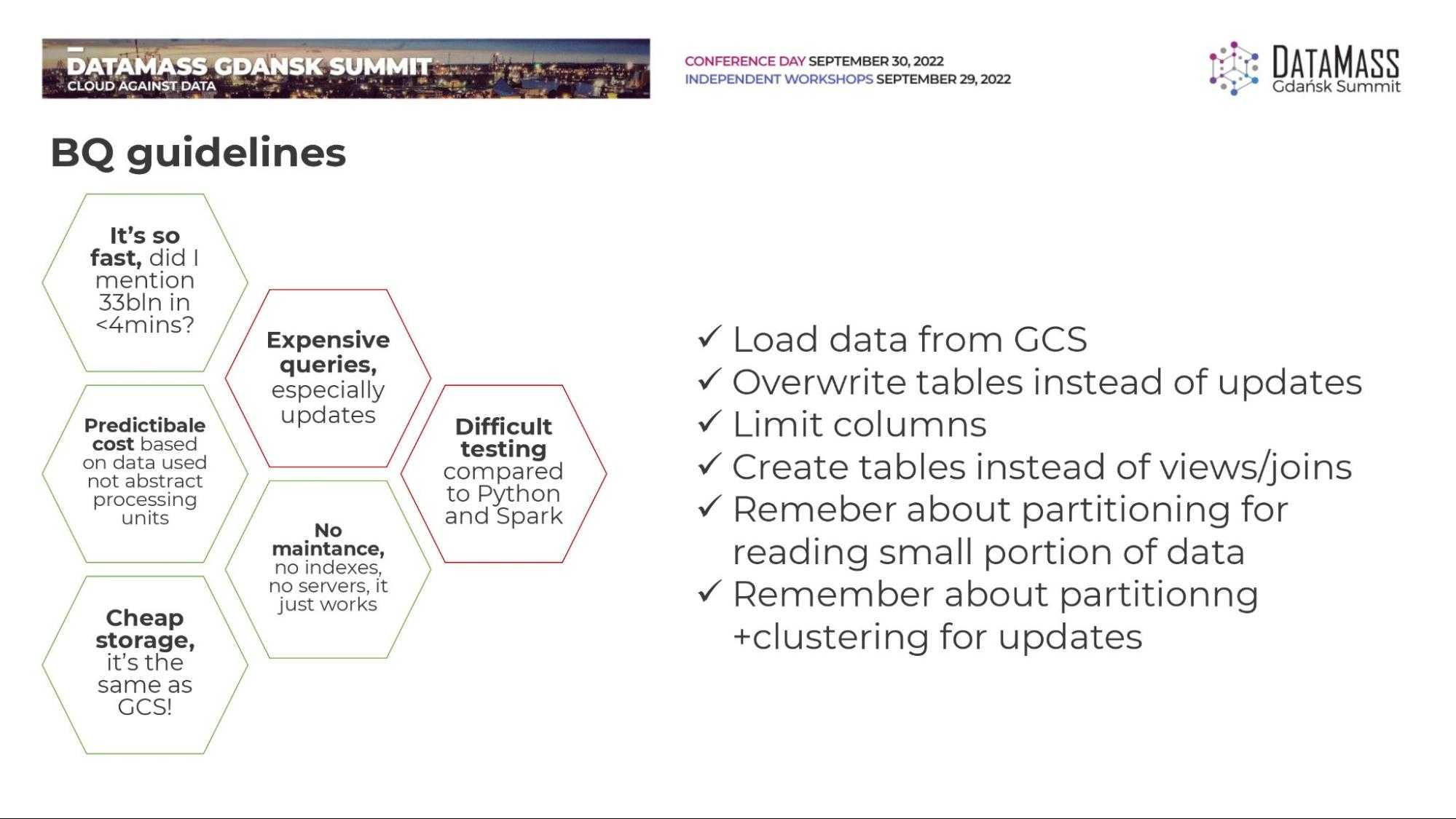

The conference definitely had a lot to offer to technical people. In “How to process 33bln events from set top boxes in under 4 minutes”, Grzegorz Gwoźdź demonstrated an interesting TV/video-on-demand use case at Vectra, which generates billions of real-time events. Grzegorz is a well-known Tricity geek and, as expected, he took a deep dive into the technical details of the data platform. ‘Keep it simple’, he says, summing up his lessons learned. Overcomplexity and covering every corner case imaginable may kill your development and explode your cloud bill. It was very interesting to see how GCP services were selected and which factors were crucial to the decision. My takeaway from the presentation was that pragmatism will help your project succeed.

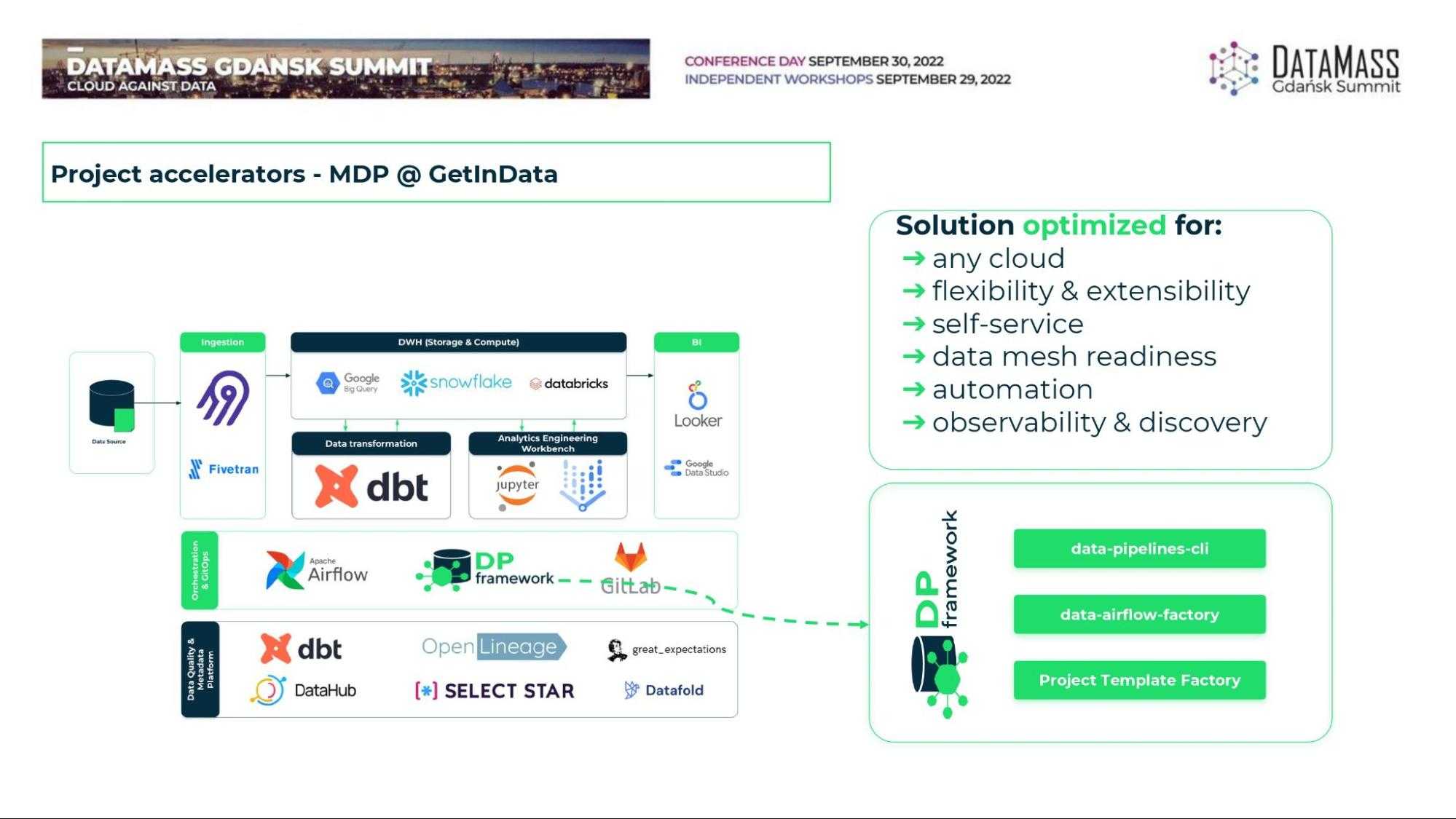

Our GetInData colleague Marek Wiewiórka, together with Daniel Owsianski and Daniel Tidström, shared his experience of building data platforms in "From first contact to a full charge... How we built a Modern Data Platform in 4 months for a FinTech scale-up." The massive effort made at GetInData to build a generic Modern Data Platform was put to use at Volt.io, where our team architected the solution end-to-end. Managed cloud services and internal R&D plugins were put together to provide a scalable, self-service environment for analytics engineers, data analysts and business users. Marek told the story of how the whole platform was deployed in just 4 months. That impressive timeframe was achievable thanks to the groundwork done previously at GetInData Labs. Volt.io is one of the first clients to benefit from it. A lot of what was in the presentation is covered in this blog post: How we built a Modern Data Platform in 4 months for Volt.io, a FinTech scale-up.

Recently I have been involved in a huge cloud migration project, so Marcin Kaptur’s presentation "Don’t go with the flow. How did Ringier Axel Springer moved its data to the cloud?" impressed me a lot. It was a semi-technical, semi-business talk which gave a comprehensive summary of the decisions, made at the right time, that kept the migration process smooth. The crucial part of the process was to select a cloud provider with a flexible environment that offers a variety of services. Migration is sometimes approached in a lift-and-shift manner, but Marcin explained their choice of the ‘re-architect’ method. This turned out to be a way of reducing development and operating costs and ending up with a more efficient solution. Cost-effectiveness is a challenge when moving to the cloud. I really liked one important point made during the talk: ‘Tell developers about money’. To achieve cost-effectiveness, engineers need to know the impact of their decisions and be responsible for the actions they take during development. The financial impact of service usage and solution design is part of cloud engineering.

As Maciej said, if you are a Data Engineer, being at such conferences is an opportunity to stay up to date with trends that you can't miss. However, even if you haven't had the opportunity to attend DataMass yet, don't despair. Another opportunity to learn from case studies and solutions from the best in the Big Data field is fast approaching. The twin Big Data Technology Warsaw Summit 2023 conference is coming in the spring, and you can already submit presentations for this edition to stand on the stage of one of the largest Big Data events in Europe alongside the best. Submit your presentation here: Call For Presentation Big Data Technology Warsaw. Also follow the conference's profile on LinkedIn so you don't miss out on registration.
