Staying at home is not my particular strong point. But tough times have arrived and everybody needs to change their habits and reorganize their time from scratch. So, since I’ve increased the amount of time I spend at home, I wanted to use it to create something that has been on my mind for quite some time. I was wondering if I could create something with new-to-me technology and languages, like during my good old university days when the lecturer threw me into the unknown waters of programming languages, frameworks and approaches. For me, an avid Java developer, such water is Python. I hadn't written anything in this language before and I had used it mainly as a handy console calculator. Besides the programming language, I wanted to gain some knowledge about Flink, which had been on my horizon for more than a year, but I hadn't had the opportunity to switch to it from Apache Beam. To achieve all that, I needed an interesting problem to tackle.

Fourier analysis converts a signal from the time domain into the frequency domain. If, like me, you spent hours staring at visualizations in Winamp/Foobar (when you had even more free time than now), you were watching a product of the Fast Fourier Transform (FFT). So, the problem was how to harness Python, Flink and some spices (like Kafka and Avro) and turn them into some kind of spectrum analyzer. So, I had the technologies and I had the problem, but these were nothing without some restrictions.
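To make the time-to-frequency conversion concrete, here is a minimal sketch that uses only the Python standard library. It is not code from the project: it uses a naive O(n²) discrete Fourier transform instead of a real FFT (an FFT computes the same result, just faster), and the helper names `dft` and `dominant_frequency` as well as the 1000 Hz test tone are assumptions made up for illustration.

```python
import cmath
import math


def dft(samples):
    """Naive discrete Fourier transform: O(n^2), same output an FFT would give."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest bin below the Nyquist limit."""
    spectrum = dft(samples)
    half = len(samples) // 2  # for real input, bins above n/2 mirror the lower ones
    peak_bin = max(range(1, half), key=lambda k: abs(spectrum[k]))
    return peak_bin * sample_rate / len(samples)


# Hypothetical test signal: 64 samples of a 1000 Hz sine sampled at 8000 Hz.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(64)]
print(dominant_frequency(tone, SAMPLE_RATE))
```

Each output bin answers the question "how much of this frequency is in the signal?", which is exactly what the bars of a spectrum analyzer display.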

Everything needed to happen in real-time. I wanted to play some music and at the same time, I wanted to see the output from the FFT in the form of visualizations. The latencies were crucial. I wanted to hear and see the beat at the same time. Let’s go then!

The first thing which had to be done was to sample sound. I created a Python application which used this library to get the sound wave into my application. This (on Linux and Windows) allowed me to sample the loopback interface. What was the data? It was a stream of numbers, sampled at a frequency of 44100 Hz. In the case of stereo sound, in each second the lib returned 88200 doubles (44100 for each channel) representing the sound coming out of my speakers. I needed to decide how many numbers to record at once. How could I choose the right value? Well, “1” was pure real time - but it would generate 44100 messages every second that would land in Kafka. It felt like a little too much. “44100”, on the other hand, was cool from the throughput point of view (only “1” message per second), but it would create a significant delay between the live sound and the processing. “256” sounded perfect. It would create 44100 / 256 ≈ 172 messages per second, each with a max delay of 256 / 44100 Hz ≈ 5.8 ms. So, the sampler was done. Next was a message broker, to distribute the messages for further processing.
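The trade-off between message rate and buffering delay is plain arithmetic, so it can be checked with a few lines of Python. This is only a sketch of the calculation described above; the function name `chunk_tradeoff` is an assumption for illustration and is not taken from the sampler's code.

```python
SAMPLE_RATE_HZ = 44_100  # sampling frequency quoted in the text


def chunk_tradeoff(chunk_size, sample_rate=SAMPLE_RATE_HZ):
    """Return (messages per second, worst-case buffering delay in ms)."""
    messages_per_second = sample_rate / chunk_size
    max_delay_ms = 1000 * chunk_size / sample_rate
    return messages_per_second, max_delay_ms


# Compare the three chunk sizes considered in the text.
for size in (1, 256, 44_100):
    rate, delay = chunk_tradeoff(size)
    print(f"chunk={size:>6}  messages/s={rate:>9.1f}  max delay={delay:>8.2f} ms")
```

The two numbers always move in opposite directions: halving the chunk size halves the delay but doubles the number of messages the broker has to carry.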

Kafka is a very powerful and universal tool. It offers near real-time message distribution, thanks to its clever design and hardware optimization. It sounded like the right message broker for real-time data analysis. Actually, one of the sidequests of this project was to measure how fast Kafka is. But, going back to the sampler application, I had data in Python’s arrays, and I had to publish it to Kafka. Apache has a Python Avro library. Well… One thing I can say about this library is that you should consider using other libraries! This one is written purely in Python and it was terribly slow. I decided to use the fastavro library (https://github.com/fastavro/fastavro), which was much faster than the official one. So, about 172 messages per second were landing in Kafka, each one with ~6 ms latency compared to the sound coming out of the speakers. So far so good.

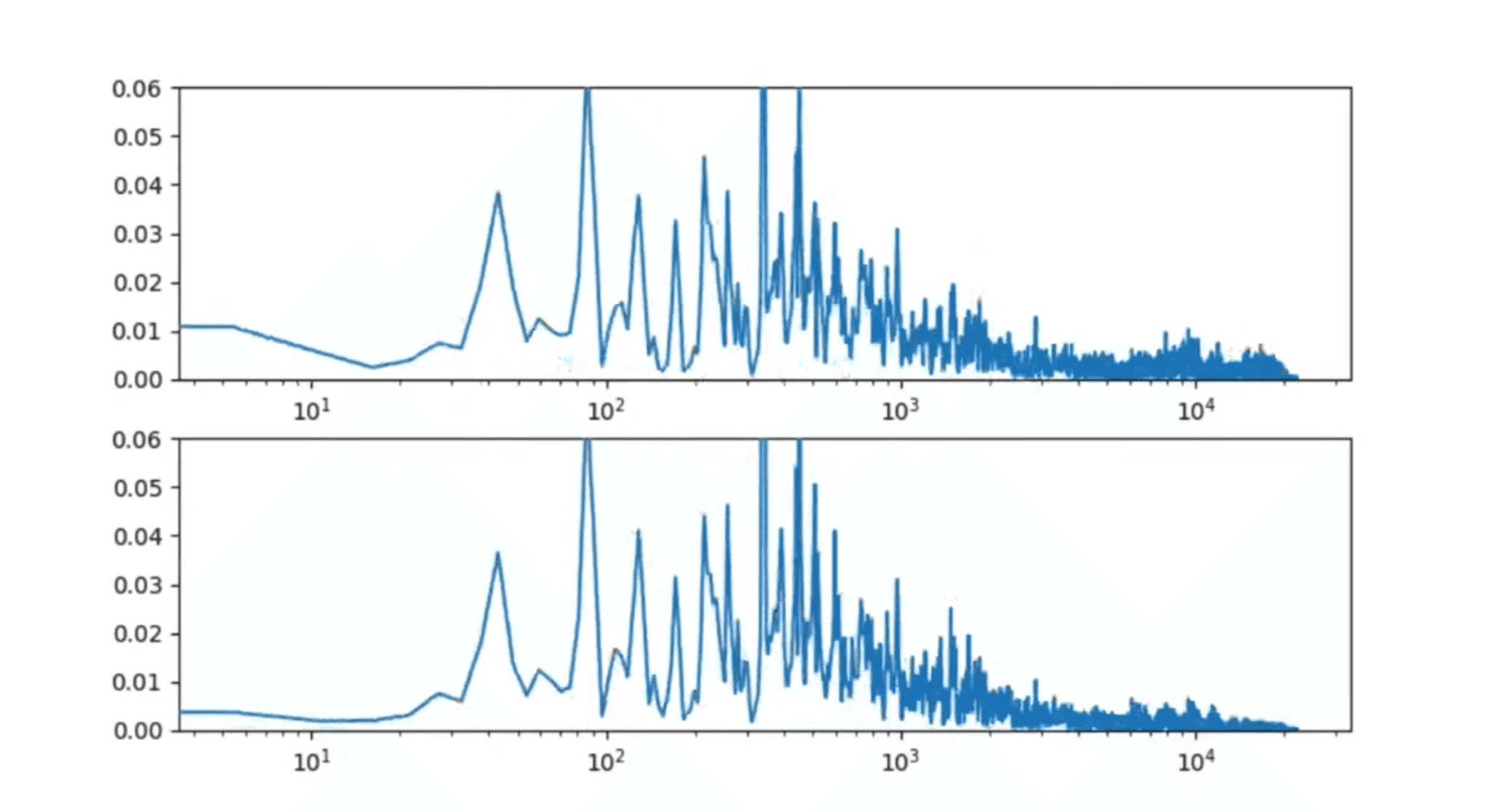

Next in line was Flink. Its streaming capabilities are well known and proven on the battlefield! Here, the code was written in Java. The Avro data from Kafka was deserialized into Java objects and the actual FFT happened. But there was a problem. Throwing 256 samples into the FFT produced 128 buckets of frequencies. It was far below my expectations when it came to the resolution. A much bigger input was needed. One way to work around this was to create a sliding window of samples. Such an aggregation would need to pre-aggregate samples and emit an 8192-sample window of the freshest samples every time a new 256-sample packet arrived from Kafka. It had a significant drawback though. Such a large window meant that the FFT would produce frequency buckets from the sound of the last 8192 / 44100 Hz ≈ 186 ms. Pricey, but I decided to go that way.
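The sliding-window idea can be sketched in a few lines. The real job was written in Java on Flink, so the Python below is not the author's implementation; it only illustrates the buffering logic, and the class name `SlidingSampleWindow` and the placeholder packets are assumptions.

```python
from collections import deque

SAMPLE_RATE_HZ = 44_100
PACKET_SIZE = 256
WINDOW_SIZE = 8_192


class SlidingSampleWindow:
    """Keeps the freshest samples and emits the full window on every packet."""

    def __init__(self, window_size=WINDOW_SIZE):
        # A bounded deque drops the oldest samples automatically.
        self._samples = deque(maxlen=window_size)

    def add_packet(self, packet):
        """Append a packet; return the window, or None while it is still filling."""
        self._samples.extend(packet)
        if len(self._samples) < self._samples.maxlen:
            return None
        return list(self._samples)


window = SlidingSampleWindow()
emitted = 0
for packet_no in range(40):
    packet = [float(packet_no)] * PACKET_SIZE  # placeholder samples, not real audio
    if window.add_packet(packet) is not None:
        emitted += 1

print(f"windows emitted: {emitted}")
print(f"window spans {1000 * WINDOW_SIZE / SAMPLE_RATE_HZ:.1f} ms of sound")
print(f"frequency bucket width: {SAMPLE_RATE_HZ / WINDOW_SIZE:.2f} Hz")
```

Once the buffer is full, every new packet yields a fresh window, so the output rate stays the same as the input rate while each FFT sees 32 times more samples.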

The FFT produced complex numbers, so after converting them to real ones, the output was serialized to Avro and sent to Kafka once again. On the other side of the pipeline, yet another Python application was waiting to draw these on a chart. Matplotlib is a commonly used tool for plotting various charts, but I didn’t know that it also has some serious animation features. This was really low-hanging fruit. What remained was to get the data from Kafka, deserialize it via fastavro and plot it.
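The text does not say how the complex numbers were turned into real ones. One common choice for a spectrum display, assumed here purely for illustration, is to take the magnitude of each complex value and optionally express it in decibels. The helper names `to_magnitudes` and `to_decibels` are hypothetical.

```python
import math


def to_magnitudes(spectrum):
    """Collapse each complex FFT value to its magnitude (a real number)."""
    return [abs(value) for value in spectrum]


def to_decibels(magnitudes, floor=1e-12):
    """Convert magnitudes to dB; the floor avoids taking log10 of zero."""
    return [20 * math.log10(max(m, floor)) for m in magnitudes]


# Hypothetical FFT output with three frequency buckets.
spectrum = [3 + 4j, 0j, -1j]
magnitudes = to_magnitudes(spectrum)
print(magnitudes)
print(to_decibels(magnitudes))
```

A logarithmic scale is closer to how loudness is perceived, which is why many visualizers plot decibels rather than raw magnitudes.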

You can find the output of the whole process here: Quarantaine project: Kafka-Flink audio spectrum analyzer. I was satisfied with the results. Firstly, it works, which is already a great success. Secondly, it works with only minimal delay. And last, but not least: I’ve learned a lot and I’ve tried a lot of new technologies. Moving out of the comfy, well-known tech stack was one of the best things that has happened to me during the last couple of months.

---

PS. If you can see the little 180ms latency on the YouTube video, please consider moving ~60m from your screen and speakers :)

PS2: If you are interested in the code, you can find it here
